Every integrated circuit (IC) has a lifetime of stories to tell. From design through the end of a chip’s life, it can let us know what’s happening all along the way, provided we give it a voice and the language to do so. But until we can gain access to this data, the lives of these ICs remain secret. In-chip monitoring opens up those secrets. It helps optimize performance, and it is especially useful for applications where failure is not an option. We can make sure each chip operates within its intended range and, if it starts to degrade over time, replace it before failures occur.

You might argue that much of this data is already available without ongoing monitoring. From design data to manufacturing test data, reams of numbers exist, and those numbers tell a valuable story. But those pools of data are isolated in different places, expressed in different terms, and incomplete, making it practically impossible to learn from them as a whole. Moreover, they are circumstantial: they don’t directly indicate an event or phenomenon, but rather that event’s effect on something else (e.g., a rise in temperature as an indication of a problem). And, of course, they say nothing about the device while it’s operating.

A real-time in-chip monitoring system, for use during test and live operation, provides visibility and a common language for assessing the health and performance of the chip throughout its entire lifecycle.

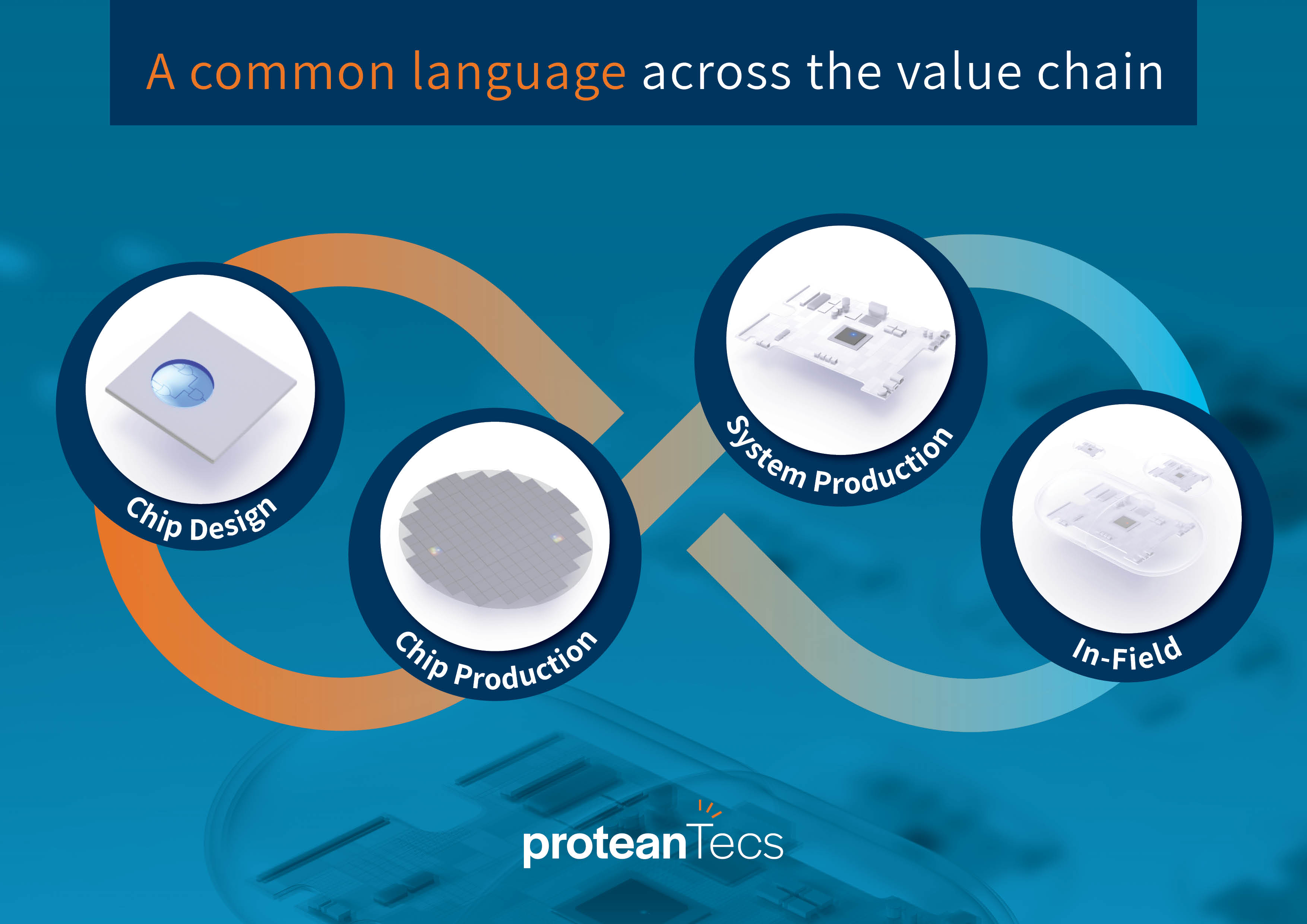

There are three siloed phases to the life of an integrated circuit: chip design, manufacturing (chip and system), and in-field operation. Each one has the potential to tell us something about the chip. Two of them already do, with significant limitations.

The design phase tells us nothing about a specific chip, but it does set expectations for how the chip should perform, along with expected margins. This data exists in a format created and consumed by chip design tools.

The chip is then manufactured and inserted into a system. Chips are tested before being sold, and systems embedding these chips may undergo many levels of test — module test, board test, rack test, system test, system-of-systems test. All of these tests result in the collection of data.

Chip test, both at wafer sort and final test, can create large volumes of data. But from a practical standpoint, tests need to be completed quickly, so many of them operate on a pass/fail basis without actually reporting numbers. A signal delay, for example, is tested by sampling the signal at the time limit to see if the signal transition has completed correctly. If it has, then the test passes, without any measurement of when the transition actually happened. You know only that it was fast enough. You don’t know how fast it was. You can get more detailed data by data logging, but that isn’t typically done during production because it takes too long. And any data, detailed or not, will be in the format of the chip tester.
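The difference between a pass/fail delay test and a data-logged one can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the nanosecond units, and the 5.0 ns limit are hypothetical and do not describe any particular tester.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DelayTestResult:
    passed: bool
    measured_ns: Optional[float]  # None when only pass/fail was recorded


def go_no_go_test(actual_delay_ns: float, limit_ns: float) -> DelayTestResult:
    """Sample once at the time limit: has the transition completed by then?"""
    return DelayTestResult(passed=actual_delay_ns <= limit_ns, measured_ns=None)


def data_logged_test(actual_delay_ns: float, limit_ns: float) -> DelayTestResult:
    """Slower variant that records the delay itself along with the verdict."""
    return DelayTestResult(passed=actual_delay_ns <= limit_ns,
                           measured_ns=actual_delay_ns)


def margin_ns(result: DelayTestResult, limit_ns: float) -> Optional[float]:
    """Margin to the limit, or None if the delay was never measured."""
    if result.measured_ns is None:
        return None
    return limit_ns - result.measured_ns


LIMIT_NS = 5.0  # hypothetical timing limit

# Two chips with very different delays look identical to a go/no-go test.
marginal_chip = go_no_go_test(4.75, LIMIT_NS)
healthy_chip = go_no_go_test(2.0, LIMIT_NS)
print(marginal_chip == healthy_chip)  # True

# Only the logged measurement shows how close each chip is to the limit.
print(margin_ns(data_logged_test(4.75, LIMIT_NS), LIMIT_NS))  # 0.25
print(margin_ns(data_logged_test(2.0, LIMIT_NS), LIMIT_NS))   # 3.0
```

Both chips pass, but only the logged result reveals that one of them has far less margin than the other.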

System tests operate similarly, except that you can access even less information about the chip because it’s no longer testable in isolation. Pins are connected to other components on the board, which limits what we can measure. Data from these tests is certainly available, but it’s less helpful for assessing the chip itself. By contrast, internal chip data, if it were available, could provide insights not only into its own operation, but also into the parts of the system that interact with the chip. As it stands today, however, any recorded data reflects only established system test points, and it is in the format of the various system test machines. These are likely to be different from each other, and they’re definitely different from the chip tester. All in all, the various stages of manufacturing create several mini-silos of data, each with its own format.

Finally, there is real-time operation, and today no data is available for this phase. Data taken here can provide key insights into how the system and its environment affect the chip. Absent that data, if the system is going to fail, you won’t know until it actually does fail.

So we have two opportunities for data monitoring, in manufacturing and in the field, along with a baseline established at design. Existing manufacturing data formats all differ from one another, and none of them is compatible with the baseline. The field monitoring opportunity remains unfulfilled.
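To make the format problem concrete, here is a minimal sketch of what it takes to compare readings from two manufacturing stages against a design baseline. Everything in it is an assumption made for illustration: the two record layouts, the `vmin` parameter, the millivolt unit, and the function names are hypothetical and do not correspond to any real tester format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    chip_id: str
    stage: str       # e.g. "final_test" or "board_test"
    parameter: str
    value_mv: float  # one agreed unit for every stage


def from_chip_tester(line: str) -> Reading:
    """Hypothetical chip-tester record: 'chip_id,parameter,millivolts'."""
    chip_id, parameter, millivolts = line.strip().split(",")
    return Reading(chip_id, "final_test", parameter, float(millivolts))


def from_board_tester(record: dict) -> Reading:
    """Hypothetical board-tester record that reports volts under other keys."""
    return Reading(record["dut"], "board_test", record["meas"],
                   record["volts"] * 1000.0)


def deviation_from_baseline(reading: Reading, baseline_mv: dict) -> float:
    """Difference between a reading and the design-phase expectation."""
    return reading.value_mv - baseline_mv[reading.parameter]


baseline_mv = {"vmin": 700.0}  # hypothetical design expectation

readings = [
    from_chip_tester("CHIP123,vmin,712"),
    from_board_tester({"dut": "CHIP123", "meas": "vmin", "volts": 0.75}),
]
for reading in readings:
    print(reading.stage, deviation_from_baseline(reading, baseline_mv))
```

Each additional stage needs its own adapter before its data can be compared with anything else, which is the overhead a single common data language avoids.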

proteanTecs addresses this by comprehensively learning and analyzing the chip’s design, then creating Agents and inserting them during the design phase. The Agents continuously generate new data, which is uploaded to a server where accompanying algorithms transform the measurements into meaningful intelligence. The Agents accompany the chip throughout its entire lifetime (production, system integration, and in-field operation) to monitor the electronic system’s health and performance. Communication from the chip to the server, whether in the cloud or in a server farm, takes place through the test equipment or through the communication mechanism built into the end system.

Instead of separate, partial data silos, all the data, both expected and actual, resides within the same repository. Because all the data is taken by the same physical sensors and analyzed by the same machine learning analytics system, it is all in a common language and can therefore be cross-linked for margins, trends, and aging. This newly created language is based on intrinsic correlation, providing feedback and feed-forward insights for new levels of visibility across the value chain. It allows the server algorithms to alert on developing faults by following trends across the history of the chip, so that any impending issues are addressed before a failure occurs.
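As a rough illustration of alerting on a trend before a failure occurs, the sketch below fits a straight line to a chip's margin history and extrapolates to the point where the margin would reach zero. It is a deliberately simple stand-in, not the proteanTecs algorithm: the linear aging model, the function names, the units, and the sample values are all assumptions.

```python
def fit_line(times, values):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    if len(times) < 2 or len(times) != len(values):
        raise ValueError("need at least two (time, value) samples")
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("samples must span more than one point in time")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def hours_until_margin_exhausted(times_h, margins):
    """Extrapolate the fitted trend to zero margin.

    Returns the hours remaining after the last sample, or None if the
    margin is not shrinking.
    """
    slope, intercept = fit_line(times_h, margins)
    if slope >= 0:
        return None
    zero_crossing_h = -intercept / slope
    return zero_crossing_h - times_h[-1]


def should_alert(times_h, margins, horizon_h):
    """Alert if the margin is projected to run out within horizon_h hours."""
    remaining = hours_until_margin_exhausted(times_h, margins)
    return remaining is not None and remaining <= horizon_h


# Hypothetical history: margin (in percent) sampled every 1000 hours.
times_h = [0, 1000, 2000, 3000]
margins = [8, 7, 6, 5]

print(hours_until_margin_exhausted(times_h, margins))  # roughly 5000 hours
print(should_alert(times_h, margins, horizon_h=10000))
print(should_alert(times_h, margins, horizon_h=1000))
```

A pass/fail check would report this chip as healthy at every sample. The trend is what shows that its margin is being consumed and roughly how long remains.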

proteanTecs lets those chips tell their stories throughout their lifetime. Designers, production engineers, and service providers can monitor the life events, major and minor, intervening and taking action when needed.